Childhood Illness Onset Produces Worse Outcomes

There is more evidence that childhood onset of bipolar illness means a more difficult course of illness. In a study published in World Psychiatry in 2012, Baldessarini et al. pooled data from 1,665 adult patients with bipolar I disorder at seven international sites and compared their family history of bipolar disorder, outcomes, and age of onset. Among these patients, 5% had onset in childhood (age <12 years), 28% during adolescence (12-18), and 53% during a peak period from age 15-25.

Patients who were younger at onset had more episodes per year, more co-morbidities, and a greater likelihood of a family history of the illness. Patients who were older at onset were more likely to have positive functional outcomes in adulthood, like being employed, living independently, and having a family.

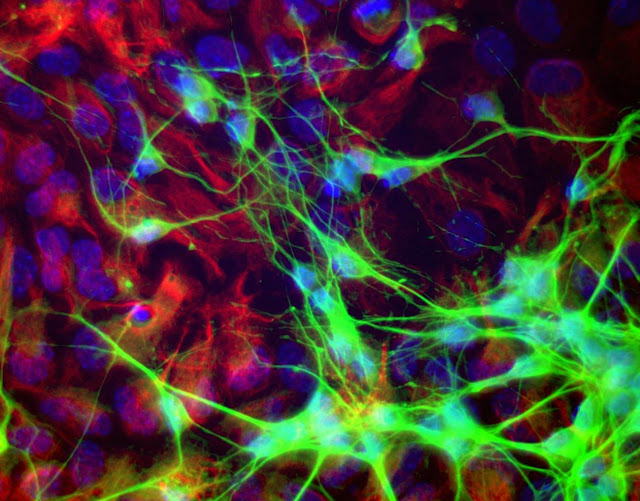

Glial, Not Neuronal, Deficits May Cause Depression

The brain consists of 12 billion neurons and four times as many glial cells. Neurons conduct electrical activity, and it is thought that changes in neural activity and synaptic activity (where neurons meet) underlie most behaviors. It was once thought that glia were just fluff, but new research shows that they may play a role in depression.

There are three types of glia: astrocytes, oligodendrocytes, and micro-glia. Researcher Mounira Banasr had previously shown that neuronal lesions in the prefrontal cortex of mice did not produce depressive-like behaviors, but glial lesions did.

In a new study presented at a recent scientific meeting, Banasr reported that destroying astrocytes in the prefrontal cortex of mice induced depressive- and anxiety-like deficits. Using a virus that specifically targeted astrocytes, the researchers documented that the depressive behavior was specifically related to loss of astrocytes and not loss of other glial cell types, such as oligodendrocytes or micro-glia.

Editor’s Note: There is evidence of glial abnormalities in patients with mood disorders. Banasr’s research raises the possibility that glial deficits (rather than neuronal alterations) could be crucially involved in depression. In this study, the depressive- and anxiety-like behaviors persisted for 8 days following the astrocyte ablation, but by day 14 the animals had recovered, possibly with the production of a new supply of astrocytes. These data also raise the possibility that targeting the mechanisms of glial dysfunction could be a new avenue to pursue in the therapeutic approaches to depression.

Long-term Treatment Response in Bipolar Illness

Willem Nolen, a researcher who has spent 40 years studying unipolar and bipolar disorder, recently retired from his position at Groningen Hospital in the Netherlands. In February, his retirement was celebrated with a symposium where he and other researchers discussed some of their important findings from the last several decades.

Nolen recently published a double-blind randomized study showing that in patients who were initially responsive to monotherapy with quetiapine (Seroquel), continuing the drug (at doses of 300-800mg/night) or switching to lithium were both more effective than switching to placebo over 72 weeks of long-term follow-up.

This study shows that quetiapine, which is only FDA-approved for long-term preventative treatment when used in combination with lithium or valproate (Depakote), also has efficacy when used as monotherapy.

Lithium is Highly Effective in Long-term Prevention

Nolen’s work also adds to an impressive amount of literature showing that lithium is highly effective in long-term prevention. This case is especially noteworthy because lithium was effective even in patients who had initially been selected for their response to quetiapine. (Studies that use this kind of “enriched sample” can only claim that quetiapine has long-term efficacy in those patients who initially respond well to the drug.) The data on lithium are even more impressive since the patients in this study were not enriched for lithium response.

Nolen has also conducted multiple studies of lithium, but optimal doses and target blood levels of the drug remain controversial. The therapeutic range of lithium is usually considered to be 0.6 to 1.2 meq/L, but some have argued that lower levels may still be effective. In a new analysis of those patients in the quetiapine study who were switched to lithium treatment, Nolen found that only lithium levels above 0.6 meq/L produced better results than placebo in long-term prophylaxis.

Armodafinil: An Antidepressant For Bipolar I Depression

At a recent scientific meeting, researcher Joe Calabrese reported that armodafinil (Nuvigil), a drug that is FDA-approved for the treatment of narcolepsy, performed significantly better than placebo at producing antidepressant effects in bipolar depression when added to treatment with mood stabilizers. At a dose of 150mg, the drug behaved less like a psychomotor stimulant and more like a traditional antidepressant in that the antidepressant effects were delayed in onset, whereas stimulants have a rapid onset of action. Armodafinil was well-tolerated, not seeming to produce weight gain or switches into mania.

Editor’s Note: At the moment, quetiapine (Seroquel) is the only FDA-approved monotherapy for the treatment of bipolar depression. Lurasidone (Latuda) may soon be approved, as we reported in BNN Volume 16, Issue 2 from 2013. It now looks as though armodafinil could become the third approved agent for bipolar depression.

Quetiapine has efficacy in preventing depressive and manic recurrences both alone in monotherapy and in combination therapy with lithium or valproate. So far only the combination therapy is FDA-approved for preventative purposes. The data on the long-term effects of armodafinil and lurasidone are eagerly awaited, as long-term prevention is a critical component of the treatment of bipolar depression.

Youth at High Risk for Bipolar Disorder Show White Matter Tract Abnormalities

At a recent scientific conference, researcher Donna Roybal presented research showing that children at high risk of developing bipolar disorder due to a positive family history of the illness had some abnormalities in important white matter tracts in the brain. Prior to illness onset, there was increased fractional anisotropy (FA), a sign of white matter integrity, but following the onset of full-blown bipolar illness there were decreases in FA.

Roybal postulated that these findings show an increased connectivity of brain areas prior to illness onset, but some erosion of the white matter tracts with illness progression.

Editor’s Note: It will be critical to replicate these findings in order to better define who is at highest risk for bipolar disorder so that attempts at prevention can be explored.

Selective Erasure Of Cocaine Memories In A Subset Of Amygdala Neurons

We have written before about the link between memory and both fear and addiction. At a recent scientific meeting, researchers discussed attempts to erase cocaine-cue memories in mice.

In articles published in Science in 2007 and 2009, Han et al. showed that about 20% of neurons in the lateral amygdala of mice were involved in the formation of a fear memory, and that selective deletion of these neurons could erase the fear memory. Using the same methodology, Josh Sullivan et al. identified neurons that were active in the mouse brain during cocaine conditioning. Amygdala activity showed that the mice preferred an environment where they received cocaine to an environment where they didn’t. The researchers noticed increased cyclic AMP, a messenger that led to increased production of cyclic AMP response element binding protein (CREB). When the researchers targeted the neurons in the lateral amygdala that were overexpressing CREB, they found that selective destruction of the overexpressing neurons disrupted the cocaine-induced place preference.

The research team further documented this effect by temporarily, rather than permanently, knocking out neuronal function. They could reversibly turn off neurons with an inert compound that promotes neuronal inhibition. Silencing the neurons that were overexpressing CREB before the conditioned place preference testing also limited cocaine-induced place preference memory.

Editor’s Note: While this type of intervention is not feasible in humans with cocaine addiction, these data do shed more light on the mechanisms behind cocaine conditioning.

We have written before that if extinction training to break a cocaine habit or neutralize a learned fear is performed within the brain’s memory reconsolidation window (five minutes to one hour after memory recall), it can induce long-lasting alterations in cocaine craving or conditioned fear.

It is possible that properly timed extinction of cocaine- or fear-conditioned memories might work similarly to the selective silencing of neurons that was carried out in the mice using a drug that inhibited CREB-activated neurons. Determining the commonalities between these ways of eliminating conditioned memories could lead to a whole new set of psychotherapeutic approaches to anxiety disorders, addictions, and other pathological habits.

The Amygdala Plays a Role in Habitual Cocaine Seeking

At a recent scientific meeting, Jennifer E. Murray et al. presented findings about the amygdala’s role in habitual cocaine seeking. The amygdala is the part of the brain that makes associations between a stimulus and a response. When a person begins using cocaine, a signal between the amygdala and the ventral striatum (also known as the nucleus accumbens), the brain’s reward center, creates a pleasurable feeling for the person. The researchers found that in mice who have learned to self-administer cocaine, as an animal progresses from intermittent use to habitual use, the amygdala connections shift away from the ventral striatum toward the dorsal striatum, a site for motor and habit memory. If amygdala connections to the dorsal striatum are severed, the pattern of compulsive cocaine abuse does not develop.

Editor’s Note: These data indicate that the amygdala is involved in cocaine-related habit memory, and that the path of activity shifts from the ventral to the dorsal striatum as the cocaine use becomes more habit-based—automatic, compulsive, and outside of the user’s awareness.

As we’ve reviewed before, the amygdala also plays a role in context-dependent fear memories, such as those that occur in post-traumatic stress disorder (PTSD). The process of retraining a person with PTSD to view a stimulus without experiencing fear is called extinction training. A study by Agren et al. published in Science in 2012 demonstrated that when extinction training of a learned fear took place within the brain’s memory reconsolidation window (five minutes to one hour after active memory recall), the training was sufficient to not only “erase the conditioned fear memory trace in the amygdala, but also decrease autonomic evidence of fear as revealed in skin conductance changes in volunteers.”

The preclinical data presented by Murray and colleagues suggest the possibility that amygdala-based habit memory traces could also be revealed via functional magnetic resonance imaging (fMRI) in subjects with cocaine addiction. Attempts at extinction of cocaine craving, if administered within the memory reconsolidation window, might likewise be able to erase the cocaine addiction/craving memory trace, as Xue et al. reported in Science in 2012.

‘Bath Salts’ Ingredient Worse than Cocaine, and 10 Times More Powerful

Between 2010 and 2011, reports about the recreational drug commonly known as “bath salts” skyrocketed, and its use has been connected with a range of serious consequences including heart attack, liver failure, prolonged psychosis, suicide, violence, and even cannibalism. The drug, which is distinct from actual bath salts and sometimes goes by other names such as “plant food,” contains synthetic cathinones, which have effects that resemble those of amphetamines and cocaine. (Natural cathinone is derived from the plant Khat, which some cultures, particularly in the Horn of Africa and the Arabian Peninsula, have used socially for its euphoric effects when chewed.)

Synthetic cathinones are not well understood, but a few studies published in the journal Neuropsychopharmacology this year provided some preliminary findings. The most common synthetic cathinone found in the blood and urine of patients admitted to emergency rooms in the US after taking bath salts is 3,4-methylenedioxypyrovalerone, or MDPV.

In a study of rats by Baumann et al., MDPV was found to have a strong blocking effect on uptake of the neurotransmitters dopamine and norepinephrine, while having only weak effects on uptake of serotonin. MDPV’s ability to inhibit the clearance of dopamine is similar to cocaine’s actions, but MDPV is much more potent and effective at this. MDPV was also 10 times more potent than cocaine at producing physical symptoms in the rats, such as motor activation, tachycardia (rapid heart rate), and hypertension (high blood pressure).

Another study of rats by Fantegrossi et al. found that rats that received MDPV experienced motor stimulation that was potentiated by being in a warm environment. When the rats received relatively high doses in this environment, they engaged in profound stereotypy (repetitive movement) and self-injurious behavior such as skin-picking or chest-biting. This study also found similarities between the internal effects of MDPV and those of MDMA (ecstasy) and methamphetamine.

Ecstasy Use Increases Serotonin Receptors in Women

MDMA, better known as the drug ecstasy, has been found to reduce serotonin axons in animals. A small study by Di Iorio et al. published in the Archives of General Psychiatry in 2012 suggests that the drug also has detrimental effects on serotonin signaling in humans.

The researchers used positron emission tomography (PET) scans to identify serotonin receptors in the brains of 10 women who had never used ecstasy and 14 who had used the drug at least five times before and then abstained for at least 90 days. The team found significantly greater cortical serotonin2A receptor nondisplaceable binding potential (serotonin2A BPND, an indicator of serotonin receptors) in abstaining MDMA users than in those women who had never used the drug.

The increase in serotonin receptors observed in these ecstasy users could be a sign of chronic serotonin neurotoxicity. Loss of serotonin nerve terminals decreases serotonin levels and secondarily results in the production of more serotonin receptors. Thus, one explanation for the receptor increase is that it is prompted by the decrease in serotonin transmission that MDMA is known to cause.

The higher levels of serotonin2A BPND were found in several regions of the MDMA users’ brains: occipital-parietal, temporal, occipito-temporal-parietal, frontal, and frontoparietal. Lifetime use of the drug was associated with serotonin2A BPND in the frontoparietal, occipitotemporal, frontolimbic, and frontal regions. There were no regions in which the MDMA users had lower levels of receptors than women in the control group. The duration of the ecstasy users’ abstinence from using the drug had no effect on levels of serotonin2A BPND observed, suggesting that the effects might be long-lasting, if not permanent.

Editor’s Note: Given serotonin’s importance in brain function and the drug’s popularity for recreational use, this finding has implications both for ecstasy users and for research on serotonin signaling. Ecstasy is supposed to be a “love drug,” but people should show their serotonin nerve terminals some love and look after them by avoiding the drug.

Even Short-Term Recreational Use Of Ecstasy Causes Deficits In Visual Memory

German researchers have found that MDMA (ecstasy) users who took more than 10 pills in a one-year period showed deficits in visual memory. Wagner et al. published the study in the journal Addiction in 2012.

In tests where participants were trained to associate certain words with certain images and then recall one in response to the other, those who had taken ecstasy at least ten times the previous year showed deterioration in both their immediate and delayed recall skills.

Given the role of the hippocampus in relational memory, the researchers suspect that there is a relationship between ecstasy use and hippocampal dysfunction.

Editor’s Note: This is the most definitive study on this subject so far because it observed new users before and after they had used ecstasy at least 10 times in one year (unlike many retrospective studies that observed participants only after they had been using the drug for some time, so it was impossible to know if they had pre-existing memory problems).

Other data in animals and humans suggest that ecstasy burns out the terminals of serotonergic neurons and thus causes brain damage. It now appears this damage to the brain and memory can occur even during short-term or casual ecstasy use.